Introduction

In many operations, it is desirable to combine the force capabilities of industrial robot manipulators directly with the skills and sensorimotor abilities of a human operator for complex assembly tasks. However, traditional robot reprogramming, or repeatedly switching between robot programs, is impractical in a hybrid human-robot cooperative assembly scenario. Therefore, effort should go into introducing a greater degree of autonomy and intelligence in such industrial settings, for example automatic object recognition and engagement of the corresponding grasping algorithm. This should reduce the time robot operators spend reprogramming the robots during these operations.

Human motion tracking systems are widely used in application areas such as teleoperation of robot manipulators, human-robot interaction, medical diagnostics, video entertainment, virtual reality and animation, navigation, and sport. To make arm tracking systems freely available to the academic community, we developed a novel open-source design concept of a wearable 7-DOF wireless inertial system for full human arm motion tracking. Unlike classical inertial motion tracking systems with three IMUs, the presented design uses a hybrid combination of two IMUs and one potentiometer. The proposed hybrid design, with its reduced number of IMUs, achieves reasonably accurate tracking performance, sufficient to support research on industrial robot-manipulator teleoperation.

Phase 1

As a first step of this research, a novel low-cost 4-DOF wireless human arm motion tracker was developed. The preliminary design uses a single inertial measurement unit coupled with an Unscented Kalman filter to estimate the upper arm orientation quaternion, and a potentiometer sensor to estimate the elbow joint angle. The arm tracker prototype transmits sensor data wirelessly to the control PC, where the motion is visualized in real time using the open-source 3D computer graphics software Blender. The prototype was verified against an Xsens MVN motion tracking system.
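The two measurements described above (an upper arm orientation quaternion and an elbow joint angle) are enough to place the elbow and wrist relative to the shoulder. The following is a minimal sketch of that geometry, not the project's actual code. The function names, the link lengths, the `(w, x, y, z)` quaternion order, and the axis conventions (upper arm along the local x-axis, elbow flexion about the local z-axis) are all assumptions made for illustration.

```python
import numpy as np

def quat_to_rot(q):
    """Rotation matrix from a quaternion given as (w, x, y, z)."""
    w, x, y, z = q / np.linalg.norm(q)  # normalize to a unit quaternion
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z),     2 * (x * z + w * y)],
        [2 * (x * y + w * z),     1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y),     2 * (y * z + w * x),     1 - 2 * (x * x + y * y)],
    ])

def arm_points(q_upper, elbow_angle, l_upper=0.30, l_fore=0.25):
    """Elbow and wrist positions relative to the shoulder (metres).

    Assumed conventions (hypothetical, for illustration only):
    - the upper arm lies along its local +x axis,
    - the elbow flexes about the upper arm's local +z axis,
    - l_upper and l_fore are example link lengths.
    """
    R = quat_to_rot(np.asarray(q_upper, dtype=float))
    elbow = R @ np.array([l_upper, 0.0, 0.0])
    # Forearm direction expressed in the upper-arm frame.
    c, s = np.cos(elbow_angle), np.sin(elbow_angle)
    wrist = elbow + R @ (l_fore * np.array([c, s, 0.0]))
    return elbow, wrist

# Example: upper arm in its reference orientation, elbow flexed by 90 degrees.
elbow, wrist = arm_points([1.0, 0.0, 0.0, 0.0], np.pi / 2)
print(elbow, wrist)
```

In a real tracker the quaternion would come from the filter output and the elbow angle from the calibrated potentiometer reading; both are passed in here as plain values.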

Phase 2

In the second design stage, the previously developed human arm motion tracker was upgraded to a double-arm 7-DOF version.

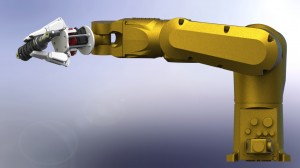

The arm motion tracking system prototypes were applied to the experimental evaluation of real-time teleoperation control frameworks implemented on a UR5 6-DOF industrial robot-manipulator, operated from a control PC through a custom-made TCP/IP communication driver. The robot end effector followed the human operator's wrist pose (position and orientation), captured by the 7-DOF arm motion tracking system in the tracker reference frame and translated to the base frame of the UR5 robot. The translation applied a scaling that reflects the user's arm and robot link dimensions, and an offset in the z-axis direction equal to half the length of the robot link. Initial experiments were conducted using the Phase 2 prototype with direct open-loop and various closed-loop control algorithms for the robot. As a result, a new real-time explicit model predictive control (EMPC) approach for the UR robot was proposed that ensured smooth motion of the teleoperated manipulator, as presented in detail in [2].
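The mapping of the wrist position from the tracker frame to the robot base frame, as described above, can be sketched as a scaling followed by a constant z-offset. This is an illustrative sketch only: the function name, the choice of scale factor as the ratio of robot reach to arm length, the optional alignment rotation `R_align`, and all numeric values are assumptions and are not taken from the published implementation.

```python
import numpy as np

def tracker_to_robot(p_wrist, arm_length, robot_reach, link_length, R_align=None):
    """Map a wrist position from the tracker frame to the robot base frame.

    Assumptions (hypothetical, for illustration only):
    - scale is the ratio of the robot's reach to the user's arm length,
    - R_align optionally rotates tracker axes onto robot base axes,
    - the z-offset equals half of link_length, as stated in the text.
    """
    scale = robot_reach / arm_length
    R = np.eye(3) if R_align is None else np.asarray(R_align, dtype=float)
    offset = np.array([0.0, 0.0, 0.5 * link_length])
    return scale * (R @ np.asarray(p_wrist, dtype=float)) + offset

# Example with made-up dimensions (metres).
target = tracker_to_robot([0.3, 0.0, 0.1], arm_length=0.6,
                          robot_reach=0.9, link_length=0.4)
print(target)
```

Orientation would be handled separately, for example by composing the wrist quaternion with the same alignment rotation; that step is omitted here.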

Phase 3

The final 7-DOF arm motion tracking system prototype was developed using a modular approach. The design uses inexpensive off-the-shelf electronic components and 3D printing for prototyping, and supports both available open-source and self-developed data fusion algorithms. This keeps the cost low and makes the prototype straightforward to replicate, so it can be readily used in activities oriented toward mechatronic system design. The system design is presented in detail in [3].

The system prototype was used in experimental work on teleoperating a UR5 robot equipped with a Robotiq 3-finger adaptive gripper and controlled using a newly formulated nonlinear model predictive control (NMPC) system. The controller allowed the manipulator end effector to track the operator's wrist pose in real time while satisfying joint velocity and acceleration limits, avoiding singular configurations, and respecting workspace constraints, including obstacles. The NMPC was integrated first into the Unity Engine and afterwards into ROS, and proved computationally efficient enough for real-time robot control.

The NMPC-based robot teleoperation framework was extended to semi-autonomous robot teleoperation with obstacle avoidance. The detailed formulation of the NMPC control framework and its implementation for the case study of UR5 robot semi-autonomous teleoperation with obstacle avoidance is reported in [3].

The 3D design models of the system module cases and module holders, created using the SolidWorks CAD software, and the program code are freely available for download from the lab GitHub page: https://github.com/alarisnu/7dof_arm_tracker. The simple system design released for public use can be easily reproduced and modified by robotics researchers and educators as a platform for building custom arm tracking solutions for research and educational purposes. Please cite the work below if you use our arm tracker design in your academic work:

A. Shintemirov, T. Taunyazov, B. Omarali, A. Kim, A. Nurbayeva, A. Bukeyev, M. Rubagotti, An Open-Source 7-DOF Wireless Human Arm Motion Tracking System for Use in Robotics Research, Sensors (MDPI), 20, 3082, 2020

Project Publications

- T. Taunyazov, B. Omarali, A. Shintemirov, A Novel Low Cost 4-DOF Wireless Human Arm Motion Tracker System, 6th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob2016), Singapore, 2016

- B. Omarali, T. Taunyazov, A. Bukeyev, A. Shintemirov, Real-Time Predictive Control of an UR5 Robotic Arm Through Human Upper Limb Motion Tracking, The 2017 ACM/IEEE International Conference on Human-Robot Interaction (HRI2017), Austria, 2017

- A. Shintemirov, T. Taunyazov, B. Omarali, A. Kim, A. Nurbayeva, A. Bukeyev, M. Rubagotti, An Open-Source 7-DOF Wireless Human Arm Motion Tracking System for Use in Robotics Research, Sensors (MDPI), 20, 3082, 2020

- M. Rubagotti, T. Taunyazov, B. Omarali, A. Shintemirov, Semi-Autonomous Robot Teleoperation with Obstacle Avoidance Via Model Predictive Control, IEEE Robotics and Automation Letters, vol. 4(3): 2746–2753, 2019

- M. Rubagotti, T. Taunyazov, B. Omarali, A. Shintemirov, Semi-Autonomous Robot Teleoperation with Obstacle Avoidance Via Model Predictive Control, The International Conference Robotics: Science and Systems, June 2019